HPC Clusters

This exercise focuses on using HPC clusters for large-scale data analysis (e.g., Next Generation Sequencing, genome annotation, evolutionary studies). These clusters contain multiple processors with large amounts of RAM, making them ideal for computationally intensive tasks. The operating system is primarily UNIX, accessed via the command line. All the commands you’ve learned in previous exercises can be used here.

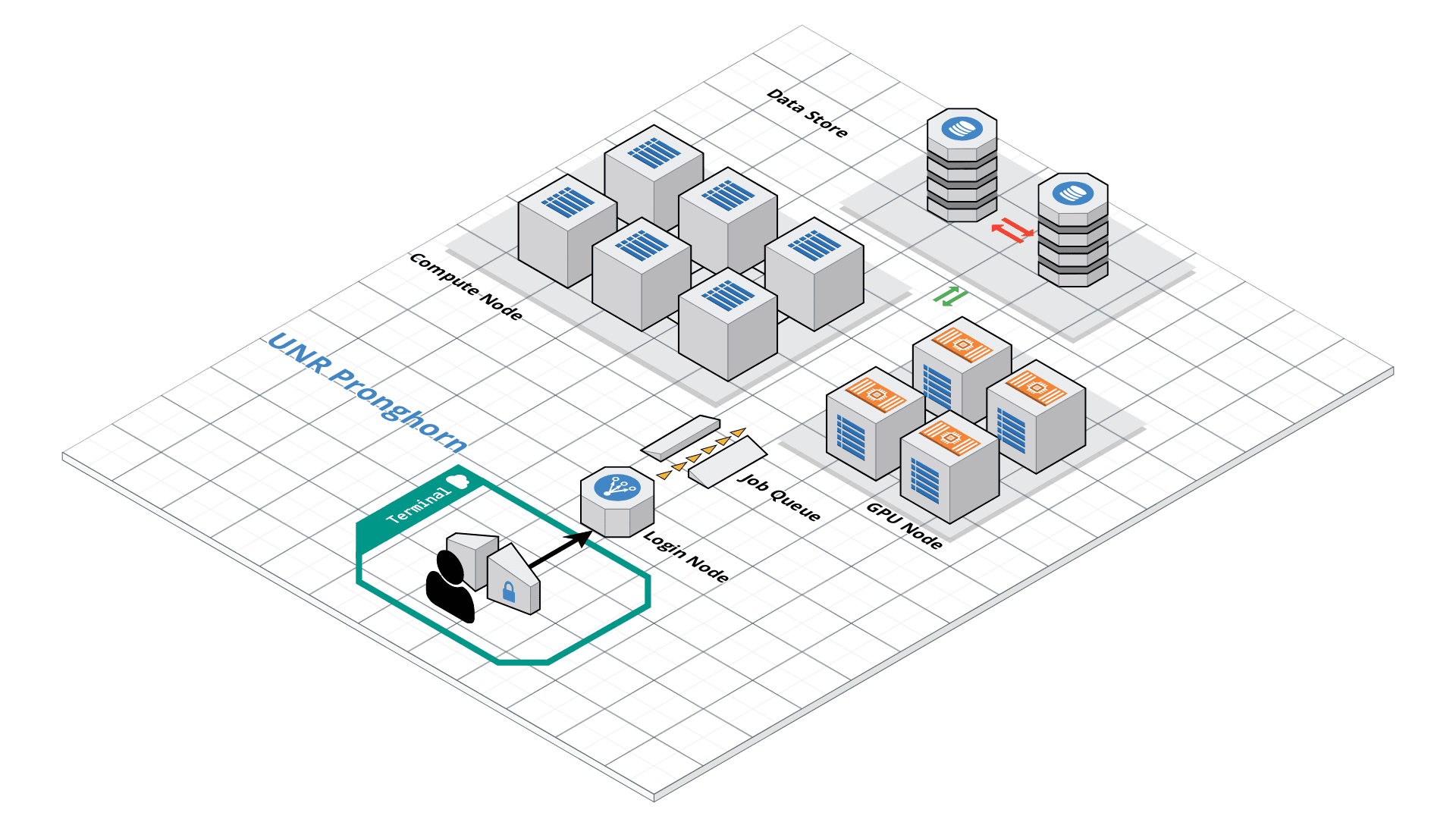

Pronghorn High Performance Computing offers shared infrastructure for researchers and students at UNR. You can find available resources here. Request access through your department or advisor. All attendees of this workshop will have their accounts set up on the HPC class education cluster using their UNR NetID and password. You should have received a confirmation email with connection instructions. This exercise covers connecting to a remote HPC server, transferring files, and running programs by requesting resources.

To log into the HPC front-end/job-submission system (pronghorn.rc.unr.edu), use your UNR NetID and password. Windows users will need an SSH client, while Mac/Linux users have SSH built-in.

ssh <YOUR_NET_ID>@pronghorn.rc.unr.edu

The first login prompts you to confirm the server's host key. Type 'yes' to continue.
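To avoid retyping the full hostname on every login, an OpenSSH client configuration entry can help. The sketch below only prints a candidate stanza for review. The alias `pronghorn` and the helper name `print_ssh_stanza` are illustrative assumptions and are not part of the workshop setup.

```shell
#!/bin/bash
# Print an ssh_config stanza for the Pronghorn login node.
# The alias "pronghorn" and this helper are hypothetical; Host, HostName
# and User are standard OpenSSH client configuration keywords.
print_ssh_stanza() {
    local netid=$1
    printf 'Host pronghorn\n'
    printf '    HostName pronghorn.rc.unr.edu\n'
    printf '    User %s\n' "$netid"
}

# Review the output, then add it to ~/.ssh/config by hand if desired.
print_ssh_stanza "<YOUR_NET_ID>"
```

With such an entry in ~/.ssh/config, `ssh pronghorn` should behave like the full command shown earlier.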

Pronghorn HPC Cluster Overview

Pronghorn is UNR’s new GPU-accelerated HPC cluster, supporting general research across NSHE. Comprising CPU, GPU, and storage subsystems, Pronghorn’s main features include:

- CPU Partition: 93 nodes, 2,976 CPU cores, 21TiB of memory.

- GPU Partition: 44 NVIDIA Tesla P100 GPUs, 352 CPU cores, 2.75TiB of memory.

- Storage: 1PB high-performance storage using IBM SpectrumScale.

Pronghorn is located at Switch Citadel Campus, 25 miles east of UNR. Switch is renowned for sustainable data center operations.

File Transfer Methods

Several methods exist for transferring files to and from HPC clusters, including command-line tools (scp, rsync) and graphical clients that use the SCP or SFTP protocols. The scp examples below copy files over an SSH connection.

Transferring from Local to Remote System

scp <source_file> <username>@pronghorn.rc.unr.edu:<target_location>

Example:

mkdir ~/bch709

cd ~/bch709

echo "hello world" > test_uploading_file.txt

scp test_uploading_file.txt <username>@pronghorn.rc.unr.edu:~/

Transferring from Remote to Local System

scp <username>@pronghorn.rc.unr.edu:<source_file> <destination_file>

Example:

scp <username>@pronghorn.rc.unr.edu:~/test_downloading_file.txt ~/

Recursive Directory Transfer (Local to Remote)

scp -r <source_directory> <username>@pronghorn.rc.unr.edu:<target_directory>

Example:

scp -r ../bch709 <username>@pronghorn.rc.unr.edu:~/
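After a transfer it can be worth confirming that the file arrived intact. The following is a minimal sketch that compares two files by SHA-256 digest. The helper name `same_checksum` is hypothetical, and the demonstration uses two local copies so that it runs without a cluster connection.

```shell
#!/bin/bash
# Compare two files by SHA-256 digest; returns 0 only when both files
# exist and their contents match. same_checksum is a hypothetical helper.
# sha256sum is part of GNU coreutils; on macOS, "shasum -a 256" prints
# digests in the same format.
same_checksum() {
    local a b
    [ -f "$1" ] && [ -f "$2" ] || return 2
    a=$(sha256sum "$1" | cut -d ' ' -f 1)
    b=$(sha256sum "$2" | cut -d ' ' -f 1)
    [ "$a" = "$b" ]
}

# Local demonstration with two copies of the same text.
tmp=$(mktemp -d)
echo "hello world" > "$tmp/original.txt"
cp "$tmp/original.txt" "$tmp/copy.txt"
if same_checksum "$tmp/original.txt" "$tmp/copy.txt"; then
    echo "checksums match"
fi
```

To check a real upload, one option is to run `sha256sum test_uploading_file.txt` locally and again on the cluster over SSH, then compare the two digests by eye.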

Opening Location

- Windows (WSL)

cd ~/bch709

explorer.exe .

- Mac

cd ~/bch709

open .

Rsync Usage

- Sync local directory to remote server:

rsync -avhP <source_directory> <username>@pronghorn.rc.unr.edu:<target_directory>

- Sync remote directory to local:

rsync -avhP <username>@pronghorn.rc.unr.edu:<source_directory> <target_directory>

Conda Installation on Pronghorn

Install Miniconda3 using the following commands:

curl -O https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash Miniconda3-latest-Linux-x86_64.sh

Follow the on-screen instructions to complete the installation.

Customizing the Bash Prompt for Pronghorn

echo '###BCH709' >> ~/.bashrc

echo 'tty -s && export PS1="\[\033[38;5;164m\]\u\[$(tput sgr0)\]\[\033[38;5;15m\] \[$(tput sgr0)\]\[\033[38;5;231m\]@\[$(tput sgr0)\]\[\033[38;5;15m\] \[$(tput sgr0)\]\[\033[38;5;2m\]\h\[$(tput sgr0)\]\[\033[38;5;15m\] \[$(tput sgr0)\]\[\033[38;5;172m\]\t\[$(tput sgr0)\]\[\033[38;5;15m\] \[$(tput sgr0)\]\[\033[38;5;2m\]\w\[$(tput sgr0)\]\[\033[38;5;15m\]\n \[$(tput sgr0)\]"' >> ~/.bashrc

echo "alias ls='ls --color=auto'" >> ~/.bashrc

source ~/.bashrc

Using Conda Environment

conda create -n RNASEQ_bch709 -c bioconda -c conda-forge sra-tools minimap2 trinity star trim-galore gffread seqkit kraken2 samtools multiqc subread

conda activate RNASEQ_bch709
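Once the environment is active, a quick check that the expected programs are on the PATH can catch a failed installation early. This is a sketch under assumptions: `missing_tools` is a hypothetical helper, and the executable names listed are the ones these packages are assumed to provide (for example, sra-tools provides fastq-dump and subread provides featureCounts).

```shell
#!/bin/bash
# Report which of the given executables are missing from PATH.
# missing_tools is a hypothetical helper; it prints one missing name per
# line and returns non-zero if anything is missing.
missing_tools() {
    local tool status=0
    for tool in "$@"; do
        if ! command -v "$tool" > /dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return "$status"
}

# Executable names assumed for the packages installed above.
missing_tools fastq-dump minimap2 Trinity STAR trim_galore gffread \
    seqkit kraken2 samtools multiqc featureCounts \
    || echo "some tools were not found; is RNASEQ_bch709 activated?"
```

If nothing is printed, every listed program was found.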

Connecting Scratch Disk

mkdir /data/gpfs/assoc/bch709-5/$(whoami)

cd /data/gpfs/assoc/bch709-5/$(whoami)

ln -s /data/gpfs/assoc/bch709-5/$(whoami) ~/scratch
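The commands above report an error if they are run a second time, because the directory and the link already exist. A repeatable variant might look like the sketch below. The helper name `link_scratch` and the demonstration paths are hypothetical, and the demonstration runs in a temporary directory rather than on the cluster file system.

```shell
#!/bin/bash
# Create a directory (if needed) and point a symlink at it.
# link_scratch is a hypothetical helper. mkdir -p succeeds when the
# directory already exists; ln -sfn replaces an existing symlink instead
# of creating a nested link inside the directory it points to.
link_scratch() {
    local target=$1 link=$2
    mkdir -p "$target" && ln -sfn "$target" "$link"
}

# Demonstration in a temporary directory; running it twice is harmless.
demo=$(mktemp -d)
link_scratch "$demo/project/student" "$demo/scratch"
link_scratch "$demo/project/student" "$demo/scratch"
ls -ld "$demo/scratch"
```

On Pronghorn the equivalent call would be `link_scratch "/data/gpfs/assoc/bch709-5/$(whoami)" "$HOME/scratch"`.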

Job Submission with SBATCH

Create a job submission script named submit.sh:

nano submit.sh

Add the following content:

#!/bin/bash

#SBATCH --job-name=test

#SBATCH --mail-type=ALL

#SBATCH --mail-user=<YOUR_EMAIL>

#SBATCH --ntasks=1

#SBATCH --mem=1g

#SBATCH --time=8:10:00

#SBATCH --account=cpu-s5-bch709-5

#SBATCH --partition=cpu-core-0

for i in {1..1000}; do

echo $i;

sleep 1;

done
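The `--time` directive in the script above sets the wall-clock limit as hours:minutes:seconds, and Slurm also accepts a days-hours:minutes:seconds form. As a worked example, the sketch below converts both forms to seconds. `slurm_time_to_seconds` is a hypothetical helper, not a Slurm command, and it handles only these two forms.

```shell
#!/bin/bash
# Convert a Slurm --time value to seconds.
# Only the H:M:S and D-H:M:S forms are handled here;
# slurm_time_to_seconds is a hypothetical helper, not a Slurm command.
slurm_time_to_seconds() {
    local spec=$1 days=0 h m s
    if [[ $spec == *-* ]]; then
        days=${spec%%-*}      # text before the dash
        spec=${spec#*-}       # text after the dash
    fi
    IFS=: read -r h m s <<< "$spec"
    # 10# forces base 10 so that values such as "08" are not read as octal
    echo $(( 10#$days * 86400 + 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

slurm_time_to_seconds 8:10:00      # prints 29400
slurm_time_to_seconds 2-15:00:00   # prints 226800
```

The loop in submit.sh sleeps for about 1000 seconds in total, so it fits comfortably inside the 29400-second limit requested.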

Submit the job:

chmod 775 submit.sh

sbatch submit.sh

To cancel the job:

scancel <JOB_ID>
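The job ID needed by scancel is printed by sbatch in a message of the form "Submitted batch job <JOB_ID>". A small sketch for capturing it follows. `parse_job_id` is a hypothetical helper, and the sbatch output is simulated so the example runs without a scheduler.

```shell
#!/bin/bash
# Extract the numeric job ID from sbatch's confirmation message, which has
# the form "Submitted batch job <JOB_ID>". parse_job_id is a hypothetical
# helper name.
parse_job_id() {
    awk '/^Submitted batch job/ { print $4 }'
}

# Simulated sbatch output, so the example runs without a scheduler.
echo "Submitted batch job 123456" | parse_job_id   # prints 123456
```

On the cluster, `job_id=$(sbatch submit.sh | parse_job_id)` would store the ID, after which `squeue -j "$job_id"` shows the job state and `scancel "$job_id"` cancels it. Alternatively, `sbatch --parsable` prints the ID without the surrounding text.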

SRA

Sequence Read Archive (SRA) data, available through multiple cloud providers and NCBI servers, is the largest publicly available repository of high throughput sequencing data. The archive accepts data from all branches of life as well as metagenomic and environmental surveys.

Searching the SRA: Searching the SRA can be complicated. Often a paper or reference will specify the accession number(s) connected to a dataset. You can search flexibly using a number of terms (such as the organism name) or the filters (e.g. DNA vs. RNA). The SRA Help Manual provides several useful explanations. It is important to know that projects are organized and related at several levels. Some important terms include:

- BioProject: A collection of biological data related to a single initiative, originating from a single organization or from a consortium of coordinating organizations; see for example BioProject 272719.

- BioSample: A description of the source materials for a project.

- Run: The actual sequencing runs (usually starting with SRR); see for example SRR1761506.
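These levels can be told apart by the accession prefix. The sketch below maps the prefixes that appear in this exercise to their level. `classify_accession` is a hypothetical helper, and it covers only NCBI-issued accessions (the ERR/DRR-style prefixes used by ENA and DDBJ are not handled).

```shell
#!/bin/bash
# Classify an accession by its prefix. classify_accession is a hypothetical
# helper; only the NCBI-issued prefixes seen in this exercise are handled.
classify_accession() {
    case $1 in
        PRJNA*) echo "BioProject" ;;
        SAMN*)  echo "BioSample" ;;
        SRP*)   echo "SRA study" ;;
        SRS*)   echo "SRA sample" ;;
        SRX*)   echo "SRA experiment" ;;
        SRR*)   echo "SRA run" ;;
        *)      echo "unknown" ;;
    esac
}

for acc in PRJNA272719 SRR1761506; do
    printf '%s\t%s\n' "$acc" "$(classify_accession "$acc")"
done
```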

Publication (Arabidopsis)

SRA Bioproject site

https://www.ncbi.nlm.nih.gov/bioproject/PRJNA272719

Runinfo

| Run | ReleaseDate | LoadDate | spots | bases | spots_with_mates | avgLength | size_MB | AssemblyName | download_path | Experiment | LibraryName | LibraryStrategy | LibrarySelection | LibrarySource | LibraryLayout | InsertSize | InsertDev | Platform | Model | SRAStudy | BioProject | Study_Pubmed_id | ProjectID | Sample | BioSample | SampleType | TaxID | ScientificName | SampleName | g1k_pop_code | source | g1k_analysis_group | Subject_ID | Sex | Disease | Tumor | Affection_Status | Analyte_Type | Histological_Type | Body_Site | CenterName | Submission | dbgap_study_accession | Consent | RunHash | ReadHash |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SRR1761506 | 1/15/2016 15:51 | 1/15/2015 12:43 | 7379945 | 1490748890 | 7379945 | 202 | 899 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761506/SRR1761506.1 | SRX844600 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820503 | SAMN03285048 | simple | 3702 | Arabidopsis thaliana | GSM1585887 | | | | | | | no | | | | | GEO | SRA232612 | | public | F335FB96DDD730AC6D3AE4F6683BF234 | 12818EB5275BCB7BCB815E147BFD0619 |
| SRR1761507 | 1/15/2016 15:51 | 1/15/2015 12:43 | 9182965 | 1854958930 | 9182965 | 202 | 1123 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761507/SRR1761507.1 | SRX844601 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820504 | SAMN03285045 | simple | 3702 | Arabidopsis thaliana | GSM1585888 | | | | | | | no | | | | | GEO | SRA232612 | | public | 00FD62759BF7BBAEF123BF5960B2A616 | A61DCD3B96AB0796AB5E969F24F81B76 |
| SRR1761508 | 1/15/2016 15:51 | 1/15/2015 12:47 | 19060611 | 3850243422 | 19060611 | 202 | 2324 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761508/SRR1761508.1 | SRX844602 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820505 | SAMN03285046 | simple | 3702 | Arabidopsis thaliana | GSM1585889 | | | | | | | no | | | | | GEO | SRA232612 | | public | B75A3E64E88B1900102264522D2281CB | 657987ABC8043768E99BD82947608CAC |
| SRR1761509 | 1/15/2016 15:51 | 1/15/2015 12:51 | 16555739 | 3344259278 | 16555739 | 202 | 2016 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761509/SRR1761509.1 | SRX844603 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820506 | SAMN03285049 | simple | 3702 | Arabidopsis thaliana | GSM1585890 | | | | | | | no | | | | | GEO | SRA232612 | | public | 27CA2B82B69EEF56EAF53D3F464EEB7B | 2B56CA09F3655F4BBB412FD2EE8D956C |
| SRR1761510 | 1/15/2016 15:51 | 1/15/2015 12:46 | 12700942 | 2565590284 | 12700942 | 202 | 1552 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761510/SRR1761510.1 | SRX844604 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820508 | SAMN03285050 | simple | 3702 | Arabidopsis thaliana | GSM1585891 | | | | | | | no | | | | | GEO | SRA232612 | | public | D3901795C7ED74B8850480132F4688DA | 476A9484DCFCF9FFFDAADAAF4CE5D0EA |
| SRR1761511 | 1/15/2016 15:51 | 1/15/2015 12:44 | 13353992 | 2697506384 | 13353992 | 202 | 1639 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761511/SRR1761511.1 | SRX844605 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820507 | SAMN03285047 | simple | 3702 | Arabidopsis thaliana | GSM1585892 | | | | | | | no | | | | | GEO | SRA232612 | | public | 5078379601081319FCBF67C7465C404A | E3B4195AFEA115ACDA6DEF6E4AA7D8DF |
| SRR1761512 | 1/15/2016 15:51 | 1/15/2015 12:44 | 8134575 | 1643184150 | 8134575 | 202 | 1067 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761512/SRR1761512.1 | SRX844606 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820509 | SAMN03285051 | simple | 3702 | Arabidopsis thaliana | GSM1585893 | | | | | | | no | | | | | GEO | SRA232612 | | public | DDB8F763B71B1E29CC9C1F4C53D88D07 | 8F31604D3A4120A50B2E49329A786FA6 |
| SRR1761513 | 1/15/2016 15:51 | 1/15/2015 12:43 | 7333641 | 1481395482 | 7333641 | 202 | 960 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761513/SRR1761513.1 | SRX844607 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820510 | SAMN03285053 | simple | 3702 | Arabidopsis thaliana | GSM1585894 | | | | | | | no | | | | | GEO | SRA232612 | | public | 4068AE245EB0A81DFF02889D35864AF2 | 8E05C4BC316FBDFEBAA3099C54E7517B |
| SRR1761514 | 1/15/2016 15:51 | 1/15/2015 12:44 | 6160111 | 1244342422 | 6160111 | 202 | 807 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761514/SRR1761514.1 | SRX844608 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820511 | SAMN03285059 | simple | 3702 | Arabidopsis thaliana | GSM1585895 | | | | | | | no | | | | | GEO | SRA232612 | | public | 0A1F3E9192E7F9F4B3758B1CE514D264 | 81BFDB94C797624B34AFFEB554CE4D98 |
| SRR1761515 | 1/15/2016 15:51 | 1/15/2015 12:44 | 7988876 | 1613752952 | 7988876 | 202 | 1048 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761515/SRR1761515.1 | SRX844609 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820512 | SAMN03285054 | simple | 3702 | Arabidopsis thaliana | GSM1585896 | | | | | | | no | | | | | GEO | SRA232612 | | public | 39B37A0BD484C736616C5B0A45194525 | 85B031D74DF90AD1815AA1BBBF1F12BD |
| SRR1761516 | 1/15/2016 15:51 | 1/15/2015 12:44 | 8770090 | 1771558180 | 8770090 | 202 | 1152 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761516/SRR1761516.1 | SRX844610 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820514 | SAMN03285055 | simple | 3702 | Arabidopsis thaliana | GSM1585897 | | | | | | | no | | | | | GEO | SRA232612 | | public | E4728DFBF0F9F04B89A5B041FA570EB3 | B96545CB9C4C3EE1C9F1E8B3D4CE9D24 |
| SRR1761517 | 1/15/2016 15:51 | 1/15/2015 12:44 | 8229157 | 1662289714 | 8229157 | 202 | 1075 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761517/SRR1761517.1 | SRX844611 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820513 | SAMN03285058 | simple | 3702 | Arabidopsis thaliana | GSM1585898 | | | | | | | no | | | | | GEO | SRA232612 | | public | C05BC519960B075038834458514473EB | 4EF7877FC59FF5214DBF2E2FE36D67C5 |
| SRR1761518 | 1/15/2016 15:51 | 1/15/2015 12:44 | 8760931 | 1769708062 | 8760931 | 202 | 1072 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761518/SRR1761518.1 | SRX844612 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820515 | SAMN03285052 | simple | 3702 | Arabidopsis thaliana | GSM1585899 | | | | | | | no | | | | | GEO | SRA232612 | | public | 7D8333182062545CECD5308A222FF506 | 382F586C4BF74E474D8F9282E36BE4EC |
| SRR1761519 | 1/15/2016 15:51 | 1/15/2015 12:44 | 6643107 | 1341907614 | 6643107 | 202 | 811 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761519/SRR1761519.1 | SRX844613 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820516 | SAMN03285056 | simple | 3702 | Arabidopsis thaliana | GSM1585900 | | | | | | | no | | | | | GEO | SRA232612 | | public | 163BD8073D7E128D8AD1B253A722DD08 | DFBCC891EB5FA97490E32935E54C9E14 |
| SRR1761520 | 1/15/2016 15:51 | 1/15/2015 12:44 | 8506472 | 1718307344 | 8506472 | 202 | 1040 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761520/SRR1761520.1 | SRX844614 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820517 | SAMN03285062 | simple | 3702 | Arabidopsis thaliana | GSM1585901 | | | | | | | no | | | | | GEO | SRA232612 | | public | 791BD0D8840AA5F1D74E396668638DA1 | AF4694425D34F84095F6CFD6F4A09936 |
| SRR1761521 | 1/15/2016 15:51 | 1/15/2015 12:46 | 13166085 | 2659549170 | 13166085 | 202 | 1609 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761521/SRR1761521.1 | SRX844615 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820518 | SAMN03285057 | simple | 3702 | Arabidopsis thaliana | GSM1585902 | | | | | | | no | | | | | GEO | SRA232612 | | public | 47C40480E9B7DB62B4BEE0F2193D16B3 | 1443C58A943C07D3275AB12DC31644A9 |
| SRR1761522 | 1/15/2016 15:51 | 1/15/2015 12:49 | 9496483 | 1918289566 | 9496483 | 202 | 1162 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761522/SRR1761522.1 | SRX844616 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820519 | SAMN03285061 | simple | 3702 | Arabidopsis thaliana | GSM1585903 | | | | | | | no | | | | | GEO | SRA232612 | | public | BB05DF11E1F95427530D69DB5E0FA667 | 7706862FB2DF957E4041D2064A691CF6 |
| SRR1761523 | 1/15/2016 15:51 | 1/15/2015 12:46 | 14999315 | 3029861630 | 14999315 | 202 | 1832 | | https://sra-downloadb.be-md.ncbi.nlm.nih.gov/sos1/sra-pub-run-5/SRR1761523/SRR1761523.1 | SRX844617 | | RNA-Seq | cDNA | TRANSCRIPTOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP052302 | PRJNA272719 | 3 | 272719 | SRS820520 | SAMN03285060 | simple | 3702 | Arabidopsis thaliana | GSM1585904 | | | | | | | no | | | | | GEO | SRA232612 | | public | 101D3A151E632224C09A702BD2F59CF5 | 0AC99FAA6B8941F89FFCBB8B1910696E |
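Run-info tables like the one above are easy to summarize on the command line, for example to estimate how much data a set of runs represents. The sketch below sums the size_MB column for selected runs. It assumes a comma-separated file with a header row and no quoted commas; `total_size_mb` is a hypothetical helper, and the demonstration file is a three-column excerpt of the table.

```shell
#!/bin/bash
# Sum the size_MB column of a run-info CSV for selected runs.
# Assumptions: comma-separated file with a header row, no quoted commas,
# and columns named Run and size_MB. total_size_mb is a hypothetical helper.
total_size_mb() {
    local csv=$1; shift
    awk -F, -v wanted="$*" '
        BEGIN { n = split(wanted, w, " "); for (i = 1; i <= n; i++) keep[w[i]] = 1 }
        NR == 1 { for (i = 1; i <= NF; i++) col[$i] = i; next }
        ($(col["Run"]) in keep) { total += $(col["size_MB"]) }
        END { print total + 0 }
    ' "$csv"
}

# Three-column excerpt of the table above, written to a temporary file.
csv=$(mktemp)
cat > "$csv" <<'EOF'
Run,spots,size_MB
SRR1761506,7379945,899
SRR1761507,9182965,1123
SRR1761508,19060611,2324
EOF
total_size_mb "$csv" SRR1761506 SRR1761507 SRR1761508   # prints 4346
```

Looking columns up by header name rather than by position keeps the helper working if the column order differs between exports.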

Subset of data

| Sample information | Run |
|---|---|
| WT_rep1 | SRR1761506 |
| WT_rep2 | SRR1761507 |
| WT_rep3 | SRR1761508 |
| ABA_rep1 | SRR1761509 |
| ABA_rep2 | SRR1761510 |
| ABA_rep3 | SRR1761511 |

SRA Data Access

To download sequencing data from the SRA, use fastq-dump, which is part of the sra-tools package installed in the RNASEQ_bch709 environment.

Running Fastq-Dump Job

Create and submit a script fastq-dump.sh to download RNA-Seq data:

mkdir ~/scratch/raw_data

nano fastq-dump.sh

#!/bin/bash

#SBATCH --job-name=fastqdump_ATH

#SBATCH --cpus-per-task=2

#SBATCH --time=2-15:00:00

#SBATCH --mem=16g

#SBATCH --mail-type=ALL

#SBATCH --mail-user=<YOUR_EMAIL>

#SBATCH -o fastq-dump.out

#SBATCH --account=cpu-s5-bch709-5

#SBATCH --partition=cpu-core-0

fastq-dump SRR1761506 --split-3 --outdir ~/scratch/raw_data --gzip

fastq-dump SRR1761507 --split-3 --outdir ~/scratch/raw_data --gzip

fastq-dump SRR1761508 --split-3 --outdir ~/scratch/raw_data --gzip

fastq-dump SRR1761509 --split-3 --outdir ~/scratch/raw_data --gzip

fastq-dump SRR1761510 --split-3 --outdir ~/scratch/raw_data --gzip

fastq-dump SRR1761511 --split-3 --outdir ~/scratch/raw_data --gzip
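The six nearly identical fastq-dump lines above can also be generated from a list of accessions. The following is a dry-run sketch that only prints the commands. `print_fastq_dump_cmds` is a hypothetical helper, and the flags are the ones already used in fastq-dump.sh.

```shell
#!/bin/bash
# Print one fastq-dump command per accession instead of repeating the line
# by hand. print_fastq_dump_cmds is a hypothetical helper; the flags are
# the ones used in fastq-dump.sh.
print_fastq_dump_cmds() {
    local outdir=$1; shift
    local run
    for run in "$@"; do
        # --split-3 writes paired reads to <run>_1.fastq and <run>_2.fastq
        # (any unpaired reads go to <run>.fastq); --gzip compresses them.
        echo "fastq-dump $run --split-3 --outdir $outdir --gzip"
    done
}

runs=(SRR1761506 SRR1761507 SRR1761508 SRR1761509 SRR1761510 SRR1761511)
print_fastq_dump_cmds "$HOME/scratch/raw_data" "${runs[@]}"
```

Printing the commands first makes it easy to check them before placing the real loop in the batch script and submitting it with `sbatch fastq-dump.sh`.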

Trimming Reads with Trim-Galore

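Create trim.sh in ~/scratch and submit it from there, because the input and output paths in the script (raw_data/ and trim/) are relative to that directory: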

nano trim.sh

#!/bin/bash

#SBATCH --job-name=trim_ATH

#SBATCH --cpus-per-task=2

#SBATCH --time=2-15:00:00

#SBATCH --mem=16g

#SBATCH --mail-type=ALL

#SBATCH --mail-user=<YOUR_EMAIL>

#SBATCH -o trim.out

#SBATCH --account=cpu-s5-bch709-5

#SBATCH --partition=cpu-core-0

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761506 raw_data/SRR1761506_1.fastq.gz raw_data/SRR1761506_2.fastq.gz --fastqc

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761507 raw_data/SRR1761507_1.fastq.gz raw_data/SRR1761507_2.fastq.gz --fastqc

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761508 raw_data/SRR1761508_1.fastq.gz raw_data/SRR1761508_2.fastq.gz --fastqc

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761509 raw_data/SRR1761509_1.fastq.gz raw_data/SRR1761509_2.fastq.gz --fastqc

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761510 raw_data/SRR1761510_1.fastq.gz raw_data/SRR1761510_2.fastq.gz --fastqc

trim_galore --paired --three_prime_clip_R1 5 --three_prime_clip_R2 5 --cores 2 --max_n 40 --gzip -o trim --basename SRR1761511 raw_data/SRR1761511_1.fastq.gz raw_data/SRR1761511_2.fastq.gz --fastqc
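When the trimming job finishes, it helps to confirm that every run produced both trimmed read files. The sketch below assumes that, with `--paired` and `--basename <RUN>`, Trim Galore names its outputs `<RUN>_val_1.fq.gz` and `<RUN>_val_2.fq.gz`. `missing_trimmed` is a hypothetical helper, and the demonstration uses placeholder files in a temporary directory.

```shell
#!/bin/bash
# List runs whose trimmed read files are missing or empty in a directory.
# Assumption: with --paired and --basename <RUN>, Trim Galore names its
# outputs <RUN>_val_1.fq.gz and <RUN>_val_2.fq.gz. missing_trimmed is a
# hypothetical helper.
missing_trimmed() {
    local dir=$1; shift
    local run mate
    for run in "$@"; do
        for mate in 1 2; do
            [ -s "$dir/${run}_val_${mate}.fq.gz" ] || { echo "$run"; break; }
        done
    done
}

# Demonstration with placeholder files: SRR1761507 lacks its second mate.
demo=$(mktemp -d)
for f in SRR1761506_val_1 SRR1761506_val_2 SRR1761507_val_1; do
    echo placeholder > "$demo/$f.fq.gz"
done
missing_trimmed "$demo" SRR1761506 SRR1761507   # prints SRR1761507
```

On the cluster, the first argument would be the `trim` output directory and the remaining arguments the six run accessions.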

References:

- Conda Documentation: https://docs.conda.io/en/latest/

- Bioconda: https://bioconda.github.io/